Storage & compute: key ML ingredients

Lots and lots of compute!

Over the past two articles we covered the various activities involved in data collection and storage. These were part of a 3-step process that is outlined below. We now reach the final step, which is concerned with using the data we collected and stored.

“Using” data means doing something with it, and for the sake of this particular post that means using it to build AI models. To build these models we need data, which we’ve covered how to get and store, and, more importantly, computational resources to train models on that data.

AI models are predominantly trained on GPUs. GPUs are the de facto standard for training because their large number of cores lets them run many computations in parallel, a trait that ML models benefit from tremendously.

There are a few important constraints to keep in mind with regard to GPUs.

First, they are quite expensive, with one vendor (Nvidia) holding substantial market share. If you’re going to be building AI models, odds are you will be using Nvidia GPUs. Second, you will want to have your compute in close proximity to your data. There’s no point in having expensive GPUs sitting idle while they wait on I/O requests. Third, it is not unusual to see training times measured in days or weeks. The time it takes to train a model depends on three things: the model’s architecture (the more sophisticated it is, the longer it takes to train), the amount of data, and the computational power available for training.

You might recall from my previous article that our storage of choice was S3 and Redshift, both of which are hosted on AWS. It therefore makes sense to use GPU instances running on AWS. The thing is, these can be quite pricey, especially if you want to train models 24/7. EC2 on-demand pricing for GPU instances in US East (N. Virginia) ranges from ~$0.50/hr to ~$32/hr.
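To put those hourly rates in perspective, here is a quick back-of-the-envelope sketch of what a single instance costs when it runs around the clock. The two rates are the approximate low and high ends quoted above; the 30-day month and the `monthly_cost` helper are my own simplifying assumptions.

```python
HOURS_PER_DAY = 24
DAYS_PER_MONTH = 30  # simplifying assumption, not a billing rule


def monthly_cost(hourly_rate_usd: float, utilization: float = 1.0) -> float:
    """Cost of one instance over a 30-day month.

    utilization is the fraction of the month the instance is running (0..1).
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return hourly_rate_usd * HOURS_PER_DAY * DAYS_PER_MONTH * utilization


# Approximate low and high ends of the on-demand range quoted in the text.
for rate in (0.50, 32.00):
    print(f"${rate:.2f}/hr -> ${monthly_cost(rate):,.0f}/month")
# $0.50/hr -> $360/month
# $32.00/hr -> $23,040/month
```

That is per instance, so a handful of high-end instances training 24/7 adds up quickly.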

In my experience, relying entirely on cloud-based GPU instances can be cost prohibitive. You’re better off paying for on-premises GPUs, like an Nvidia DGX, and bursting to the cloud when you need more computational power. It is worth noting that the major cloud providers are building their own ASICs and offering them at substantially lower prices than Nvidia-powered instances. For example, AWS offers Inferentia instances (Inf1) for inference and plans to complement those with Trainium, a newer chip aimed at training.
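One rough way to reason about the on-premises versus cloud trade-off is a break-even calculation: how many GPU hours does it take before owning the hardware is cheaper than renting it? The sketch below is only that, a sketch. The purchase price and hourly running cost are hypothetical placeholders, not actual DGX or vendor pricing; only the cloud rate comes from the range quoted earlier.

```python
def break_even_hours(on_prem_capex_usd: float,
                     on_prem_hourly_opex_usd: float,
                     cloud_hourly_usd: float) -> float:
    """Hours of use after which owning hardware beats renting it.

    Returns infinity when the cloud is no more expensive per hour than
    running the on-premises hardware, since owning then never pays off.
    """
    hourly_saving = cloud_hourly_usd - on_prem_hourly_opex_usd
    if hourly_saving <= 0:
        return float("inf")
    return on_prem_capex_usd / hourly_saving


# HYPOTHETICAL inputs for illustration only: a $200k purchase and $4/hr for
# power, cooling, and upkeep, compared against the ~$32/hr cloud rate.
hours = break_even_hours(200_000, 4.0, 32.0)
print(f"break-even after {hours:,.0f} hours "
      f"(~{hours / 24 / 30:.1f} months of 24/7 use)")
```

The more hours per month your GPUs are actually busy, the sooner owning wins, which is why utilization matters so much in the next section.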

One additional twist to the tale. You don’t want expensive GPUs sitting idle, whether they are waiting on I/O or simply underutilized. You also don’t want your ML team in the business of figuring out how to schedule ML jobs against a cluster of GPUs. This is where MLOps engines like Polyaxon come into play. There are numerous alternatives to Polyaxon, some offered by cloud providers, like Amazon SageMaker, and others by independent vendors, like Algorithmia. At Kheiron we opted for Polyaxon due to its extensive feature set and its ability to run both on-premises and in the cloud. Polyaxon also offers a managed service.

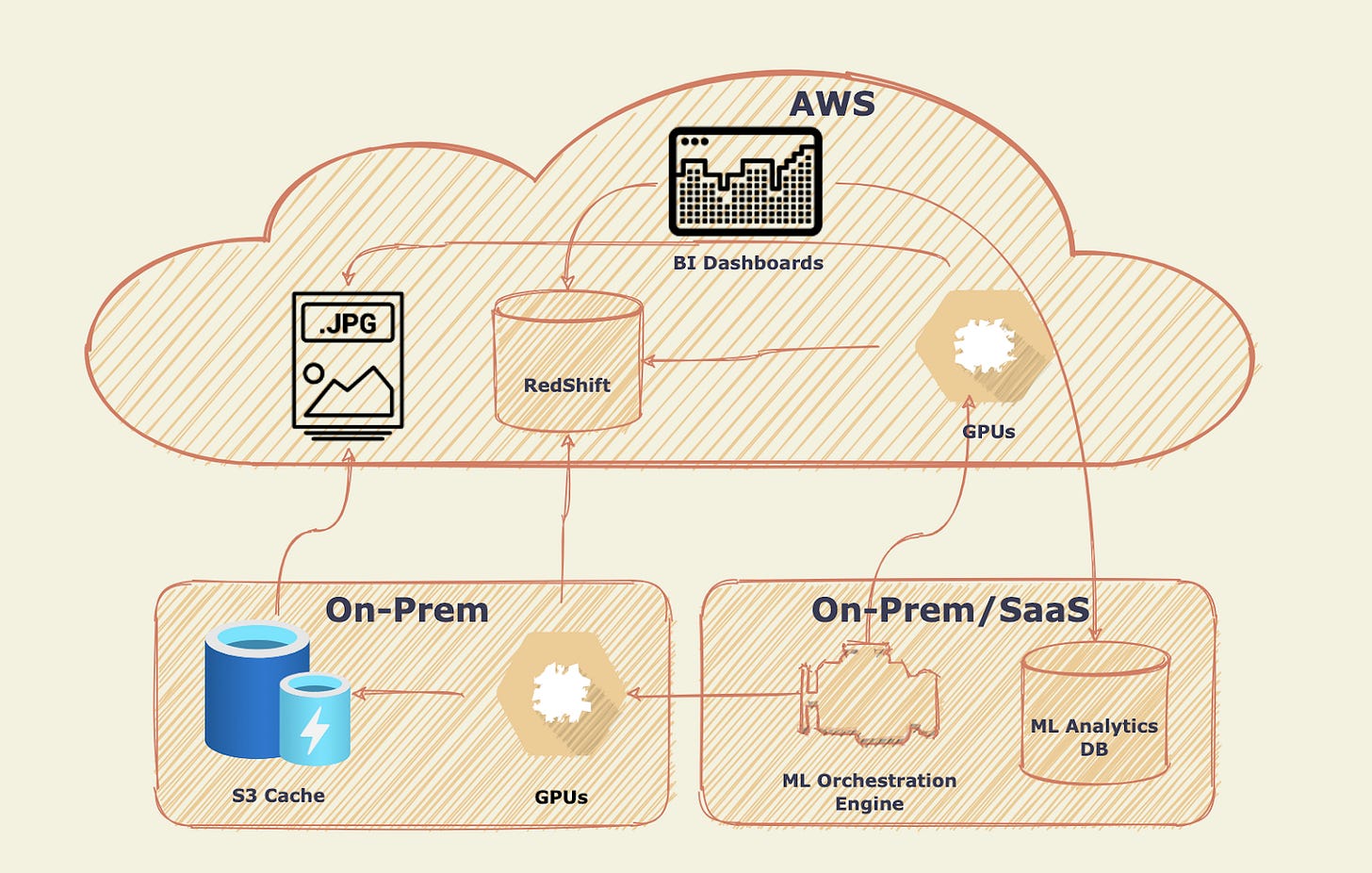

With all that laid out, our compute & storage architecture is illustrated in the diagram. At the top of the stack you see our storage layer, which comprises S3 (images) and Redshift (clinical data and other metadata). We also use GPU instances on AWS if and when we need to burst beyond our available on-premises capacity.
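The "on-premises first, burst to the cloud" idea can be sketched as a simple greedy placement. To be clear, this is a toy illustration of the policy, not how Polyaxon or any real scheduler works; the `Job` class, the job names, and the GPU counts are all made up.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    gpus: int  # number of GPUs the job needs


def place_jobs(jobs, on_prem_gpus):
    """Greedy placement: fill on-premises GPUs first, burst the rest to cloud."""
    free = on_prem_gpus
    placement = {}
    for job in jobs:
        if job.gpus <= free:
            placement[job.name] = "on_prem"
            free -= job.gpus
        else:
            # Not enough local capacity left, so this job bursts to the cloud.
            placement[job.name] = "cloud"
    return placement


# Hypothetical workload against a hypothetical 8-GPU on-premises box.
jobs = [Job("train-a", 4), Job("train-b", 4), Job("sweep-c", 2)]
print(place_jobs(jobs, on_prem_gpus=8))
# {'train-a': 'on_prem', 'train-b': 'on_prem', 'sweep-c': 'cloud'}
```

A real scheduler also has to deal with queues, priorities, and jobs finishing and freeing capacity, which is exactly the work you want an MLOps platform to take off your team's plate.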

The vast majority of our computational cluster is on-premises (Nvidia DGX). To reduce the I/O latency between our on-premises infrastructure and the storage hosted on AWS, we use S3 caches like MinIO, backed by fast NVMe SSDs. Our MLOps platform of choice is Polyaxon, which we use extensively to assign the GPU resources that ML jobs run on. These resources default to our on-premises GPUs, but we are also able to burst by firing up GPU instances on AWS. When we do that, we can read directly from S3 without going through a caching layer. Polyaxon offers a lot more than just the ability to manage and schedule a large compute cluster. One of its numerous benefits is that it keeps a history of the models it runs in a local Postgres database.
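Because MinIO speaks the S3 API, the same training code can read from either the cache or S3 itself; only the endpoint changes. Here is a minimal sketch of that switch. The cache hostname and the `s3_endpoint_for` helper are hypothetical and not taken from our actual setup.

```python
from typing import Optional

# HYPOTHETICAL address of an on-premises MinIO cache, for illustration only.
ON_PREM_CACHE_ENDPOINT = "http://minio-cache.internal:9000"


def s3_endpoint_for(location: str) -> Optional[str]:
    """Return the S3 endpoint a job should use, or None for the AWS default."""
    if location == "on_prem":
        # On-premises jobs read through the local S3-compatible cache.
        return ON_PREM_CACHE_ENDPOINT
    if location == "cloud":
        # Jobs bursting to AWS read directly from S3, with no caching layer.
        return None
    raise ValueError(f"unknown location: {location!r}")


# With boto3 installed, the result can be passed straight to the client:
#   import boto3
#   s3 = boto3.client("s3", endpoint_url=s3_endpoint_for("on_prem"))
print(s3_endpoint_for("on_prem"))
print(s3_endpoint_for("cloud"))
```

Keeping the decision in one place means the training code itself does not need to know where it is running.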

Finally, we use BI tools to assess the relationship between our training data, stored in S3 and Redshift, and model performance, which is stored in Polyaxon. There are numerous questions we would like to answer from this analysis. While we obviously care about the overall performance of our models, we are also interested in understanding the distribution of the training data set. For example, if our dataset is biased towards a certain age group, e.g. women under the age of 45, then our models might not perform well enough when they encounter data outside the training distribution, in this case women aged 45 and older. We care about the distribution of our training data because it tells us about the overall risk profile of our models and how well they can generalize across a much wider distribution. This analysis is also invaluable in helping us find gaps in our training data. The bigger the overlap between our training data and the overall data (i.e. population) distribution, the better the model will generalize. Model generalization was briefly touched on in an earlier article, and I will flesh it out in a future post.
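To make "overlap between distributions" a bit more concrete, here is one simple way to measure it: bin both datasets by age and sum the smaller share in each bin. A score of 1.0 means the two distributions match bin for bin, and 0.0 means they share nothing. The age bins and the sample ages are invented for illustration, and this is just one of many possible measures, not necessarily the one our BI tooling uses.

```python
from collections import Counter

# HYPOTHETICAL half-open age bins [low, high); ages outside them are ignored.
AGE_BINS = [(0, 45), (45, 55), (55, 65), (65, float("inf"))]


def age_distribution(ages):
    """Share of samples falling in each age bin."""
    counts = Counter()
    for age in ages:
        for low, high in AGE_BINS:
            if low <= age < high:
                counts[(low, high)] += 1
                break
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no ages fall inside the configured bins")
    return {b: counts[b] / total for b in AGE_BINS}


def overlap(p, q):
    """Overlap coefficient: sum over bins of the smaller of the two shares."""
    return sum(min(p[b], q[b]) for b in AGE_BINS)


# Made-up samples: training data skewed young, population spread more evenly.
training_ages = [30, 35, 38, 40, 41, 42, 43, 44, 50, 60]
population_ages = [35, 42, 47, 50, 52, 56, 58, 61, 66, 70]

score = overlap(age_distribution(training_ages),
                age_distribution(population_ages))
print(f"overlap: {score:.2f}")  # overlap: 0.40
```

In this toy example 80% of the training data sits in the under-45 bin, while the 65+ bin is empty, which is exactly the kind of gap this analysis is meant to surface.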


Photo by Vitor Pinto on Unsplash