Beware of transitions in incremental software development

With some maths too

One of the tenets of agile software development is incremental and iterative development. The general idea is to treat software development as a series of iterations during which a team continuously improves the state of the software, whilst also getting feedback from customers as it releases incremental versions. You might have seen this approach popularized by the diagram below.

Each iteration evolves the state of the software and adds more functionality to it. This over time increases the utility and overall functionality of the software. In the example above, we start with a skateboard and end up after a few iterations with a car. There’s nothing wrong with this approach and it is one which I am in general a big fan of.

However, there are situations where this approach can have unintended negative consequences on your customers. The most common situation where I have seen this incremental mode cause customer pain is during the transitions.

In the diagram above, you start off by giving your customers a skateboard, which evolves to become a scooter then a bicycle. The question is how did the product evolve between transitions?

Did the skateboard somehow magically turn into a scooter, or did the customer have to throw away the skateboard and replace it with the scooter? I realize the diagram above is hypothetical and that these transitions are meant to be handled - silently - by the software without much fuss on the customer’s side. The thing is, sometimes they aren’t.

Let me explain.

A few years ago I was working on a distributed file-system. One of the key properties of a file-system is data protection. File-systems, especially enterprise-grade ones, should not lose data. There are many methods for data protection, but I will very briefly cover two: mirroring and erasure coding. If you want to skip the details, the TL;DR below will do.

TL;DR: Mirroring is easy to build, but wasteful. Erasure coding is complex to build but a lot more efficient. Mirroring is the skateboard. Erasure coding is the car.

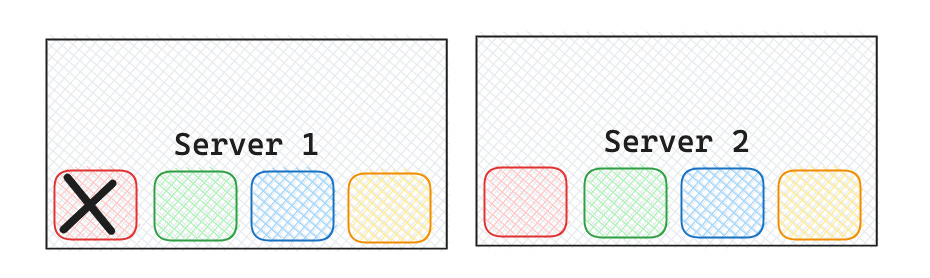

Mirroring is the simplest and easiest concept to work with. Under this mode, a file-system mirrors the data that it stores across more than one device. For example, a file-system might write each block twice on different physical devices for redundancy's sake. If one device fails, the file-system can still retrieve data from the remaining mirror(s). Consider the distributed file-system illustrated below, which is deployed across 4 servers. Each server contains 4 disks, which are mirrored across two servers. Each disk on server 1 is mirrored to another on server 2 and vice versa. The same relationship applies between servers 3 and 4. Note that mirroring typically operates at the block level and not at the disk level - my example is illustrative and very simple.

Under this protection mode, if a disk is lost the data can still be recovered from its mirror, as illustrated in the diagram below. Even though disk 1 on server 1 is lost, the data that was stored on it is intact and can be retrieved from its mirror on server 2.
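To make the recovery path concrete, here is a minimal sketch of 2x mirroring in Python. It is a toy model, not how our file-system was implemented: the `MirroredStore` class and its method names are hypothetical, and each device is just an in-memory dictionary.

```python
class MirroredStore:
    """Toy block store that writes every block to all of its mirror devices."""

    def __init__(self, num_devices=2):
        # Each device is modelled as a dict of block_id -> bytes.
        # A failed device is represented by None.
        self.devices = [dict() for _ in range(num_devices)]

    def write(self, block_id, data):
        # Write the same block to every healthy device.
        for device in self.devices:
            if device is not None:
                device[block_id] = data

    def fail(self, index):
        # Simulate losing a device and everything stored on it.
        self.devices[index] = None

    def read(self, block_id):
        # Return the block from the first healthy device that still has it.
        for device in self.devices:
            if device is not None and block_id in device:
                return device[block_id]
        raise IOError(f"block {block_id!r} is lost on all mirrors")


store = MirroredStore()
store.write("block-1", b"hello")
store.fail(0)                       # the first device dies
recovered = store.read("block-1")   # the read is served from the mirror
```

The simplicity is the appeal: reads and writes need no encoding step, and recovery is just a copy from the surviving mirror.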

There’s one main problem with this approach. It’s wasteful. A customer that deploys a file-system that operates under a 2x mirroring data protection mode will only be able to use 50% of the raw storage the file-system is deployed on. You can extend the mirroring beyond 2x and write each block/file 3x, 4x or N times, at the cost of even less usable capacity. It really depends on the data loss risks that you are able to tolerate.

Erasure coding, which is a bit more complex, works by breaking data into small chunks and distributing those chunks across multiple storage devices. The key is that erasure coding only needs a subset of those chunks to be able to rebuild the original data. For example, EC(5,3), which is a very common configuration in distributed storage systems, works like so:

5 - This is the total number of coded chunks that each datum (e.g. file/block) is split and encoded into.

3 - This is the minimum number of chunks required to reconstruct the original file data.

So in this example with (5,3) erasure coding, a file/block is split into smaller chunks (often 4KB-16KB in size). Those chunks are encoded into 5 total coded chunks using mathematical encoding algorithms. The 5 coded chunks are distributed and stored across different storage devices. If up to 2 chunks are lost or corrupted, the original file can still be rebuilt from any 3 of the remaining chunks. This provides an efficient storage overhead of only 1.67x (5/3) compared to 2x for traditional mirroring, or 3x for the more common triple mirroring mode.
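The overhead figures above are simple ratios, and a few lines of Python make the comparison explicit. This is only a back-of-the-envelope sketch; the function names are my own and not from any storage library.

```python
from fractions import Fraction


def mirroring_overhead(copies):
    """Raw bytes stored per byte of user data when keeping `copies` mirrors."""
    return Fraction(copies, 1)


def erasure_coding_overhead(total_chunks, required_chunks):
    """Raw bytes stored per byte of user data for EC(total, required)."""
    return Fraction(total_chunks, required_chunks)


def usable_fraction(overhead):
    """Share of raw capacity that is left for user data."""
    return 1 / overhead


schemes = {
    "2x mirroring": mirroring_overhead(2),
    "3x mirroring": mirroring_overhead(3),
    "EC(5,3)": erasure_coding_overhead(5, 3),
}

for name, overhead in schemes.items():
    usable = usable_fraction(overhead)
    print(f"{name}: {float(overhead):.2f}x overhead, {float(usable):.0%} usable")
```

Note that EC(5,3) tolerates the loss of two chunks, the same number of failures as triple mirroring, while storing 1.67x rather than 3x the data.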

Here’s a very simple example of how EC works. Let’s say I want to represent the integer 63 as xy, where x=6 and y=3. I can encode the datum (63) like so:

x + y = 9

x - y = 3

2x + y = 15

I only need two of these equations to solve for x and y. I can tolerate losing any one of these three and will still be able to retrieve my data (x and y).
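The same toy example can be sketched in Python. In the notation used earlier this is effectively EC(3,2): three coded values, any two of which are enough. The helper names `encode` and `decode` are hypothetical, and real erasure codes use finite-field arithmetic (e.g. Reed-Solomon) rather than plain integers, so treat this purely as an illustration.

```python
from fractions import Fraction
from itertools import combinations

# Each coded value is a*x + b*y, using the three equations from the text:
# x + y, x - y and 2x + y.
COEFFICIENTS = [(1, 1), (1, -1), (2, 1)]


def encode(x, y):
    """Turn the two data values into three coded values."""
    return [a * x + b * y for a, b in COEFFICIENTS]


def decode(surviving):
    """Recover (x, y) from any two coded values.

    `surviving` maps the equation index to its coded value.
    """
    (i, c1), (j, c2) = list(surviving.items())[:2]
    a1, b1 = COEFFICIENTS[i]
    a2, b2 = COEFFICIENTS[j]
    # Solve the 2x2 linear system with Cramer's rule.
    det = a1 * b2 - a2 * b1
    x = Fraction(c1 * b2 - c2 * b1, det)
    y = Fraction(a1 * c2 - a2 * c1, det)
    return x, y


coded = encode(6, 3)  # [9, 3, 15]

# Drop each equation in turn and rebuild the data from the other two.
for i, j in combinations(range(3), 2):
    x, y = decode({i: coded[i], j: coded[j]})
    print(f"from equations {i} and {j}: x={x}, y={y}")
```

Every pair of equations recovers x=6 and y=3, which is exactly the property that lets the system tolerate losing any one of the three coded values.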

With that out of the way, let’s get back to the main topic: incremental delivery.

Because mirroring is easier to do, we opted to deliver the first iteration of our data protection with that mode. It was relatively quick to get the feature out and provide guarantees to our customers that their data was protected, or that the risk of losing data was tolerable. In the meantime we started to work on EC, which was the desired end-state. We released the skateboard, but our goal was to ultimately deliver a car.

There’s a big caveat with this approach though. How do you migrate customers who are using mirrored data protection to erasure codes? How do customers move from the skateboard to the car?

There are a few options here; I’ll outline two of them. The first is for the software to convert all blocks from mirrored to EC in place. The second is to require customers to migrate their data from their existing file-system to a new one that is running with EC as the default. The first option requires a substantial software development effort (it’s a hard problem, and I’ll skip the details). The second option requires substantial effort from the customer.

We opted for the migration approach.

There were a few consequences of this approach. The first was that the software had to support both modes, mirrored and erasure coded, until all customers were running on one mode: EC. That took a long time, which increased the support and testing burden. The second was customer frustration. Customers do not want to deal with a data migration (a dirty word in the data & storage space); it is a cumbersome and very taxing problem for them to undertake.

The adoption curves for both modes are illustrated below. In reality the adoption isn’t truly linear, especially for moving away from mirrored. That curve had a very long tail due to customers pushing back on the move and delaying the migration.

I realize that this is a bit of an edge case. Not every feature requires such significant effort in its transitions, but a fair number of enterprise features do. The point is, when thinking of incremental delivery you absolutely have to think about how your customers will transition between two states. If the transition is not seamless, then expect to witness some of the challenges I mentioned here.

Separately, and this is very true in enterprise software, the biggest and most painful transition of them all is moving from an incumbent product to a new one. This is why incumbents always have an advantage: the easy button is to just renew rather than plan a migration to a completely new product.

The easier you make transitions, whether at the point of purchasing a product or during the customer’s lifecycle, the better off you and your customers will be.

Things of interest this week

It’s pretty uncommon to see a public company berate another, but well, Cloudflare just did that to Okta. The blog title alone sets the tone: “How Cloudflare mitigated yet another Okta compromise”. In fairness, Okta hasn’t been having a great time lately with a breach of its customer support unit.

A few weeks ago I wrote about the perils of the macro and monetary environment and its impact on startups. This past week saw the very sad demise of Convoy. I fear we will witness more later-stage startups shutting down over the next 12+ months.

Are we on the cusp of a new - open and modular - data platform? I believe we are and am excited about advances in this space. More about the 6th data platform here